Monitoring and Evaluation Guidance

1. Introduction

This guidance sets out the LEP’s approach to monitoring and evaluation and provides important principles for effective evaluation. It is based on central government guidance and supersedes the Monitoring and Evaluation Framework which was written for the Growth Deal. This guidance is delivered through a Programme of Evaluation.

The purpose of this guidance is to support the monitoring and evaluation of projects across the LEP’s portfolio of investments. This guidance is broad in scope in order to cover all investments funded through the LEP, including but not limited to:

• Growth Deal Funding
• Growing Places Fund
• Growing Business Fund and associated grant schemes
• New Anglia Capital
• Enterprise Zone Accelerator Fund
• Eastern Agri-tech Growth Initiative
• Voluntary Challenge Fund
• European funded programmes

The purpose of monitoring and evaluation is to provide the LEP and its partners with reliable and proportionate intelligence in order to:

(a) understand the progress being made towards delivery of the Economic Strategy for Norfolk and Suffolk;
(b) understand the impact our programmes / projects are having on our ambitions to increase productivity, deliver more houses and new businesses, and create more jobs;
(c) test delivery models for effectiveness, determine whether a project or intervention is delivering as planned and whether resources are being used effectively, and justify reinvestment or resource savings;
(d) identify which activities / programmes have had the most impact, and draw lessons for use in delivering future projects and programmes;
(e) justify future activity and enable further funding opportunities;
(f) determine whether the assumptions made were the right ones, and whether the project / intervention delivered and met the expectations and needs of the business community;
(g) provide Government with robust and timely information and analysis to sustain confidence in our ability to deliver projects / programmes and/or achieve our objectives.

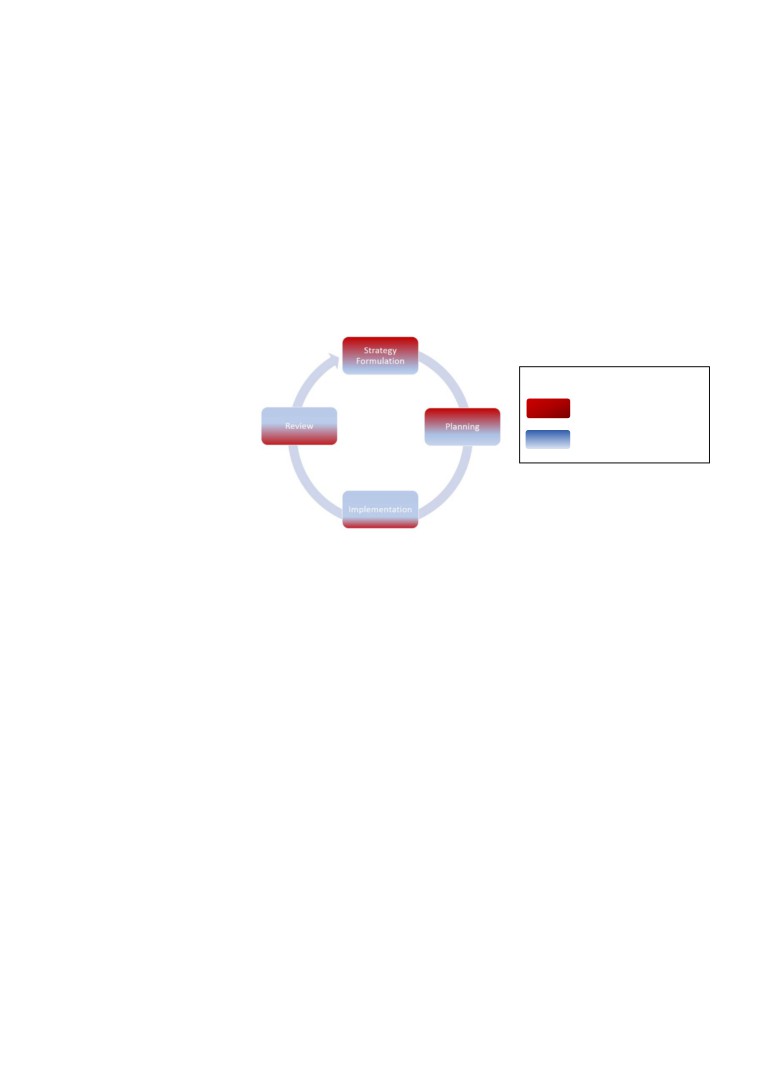

The LEP has developed a Strategic Management Cycle to show the important links between strategy development, planning, implementation and review/evaluation (Figure 1).

Strategy Formulation

Development of the LEP’s ambitions and intent (i.e. what the LEP and partners want to achieve and by when), expressed as a range of specific high-level objectives.


Revised 01/11/2019

Planning

Determining how to achieve the LEP’s ambitions and supporting objectives, identifying the resources required and developing programmes/projects/interventions which will help realise these ambitions, incorporating monitoring and evaluation criteria.

Implementation

Delivery of LEP programmes/projects/interventions, working with partners, and monitoring and reporting progress to the LEP Board, LEP partners and Government.

Review

Evaluation of LEP programmes/projects/interventions, determining their effectiveness and measuring outcomes and impacts to establish whether the anticipated benefits have been realised.

Key: Strategy team; Programmes team

Figure 1: The Strategic Management Cycle

Good-quality evaluations play an important role in setting and delivering the LEP’s ambitions and objectives, demonstrating accountability and providing evidence for independent scrutiny processes. They also contribute valuable knowledge to our evidence base, feeding into future strategy development and playing a crucial role in determining our future projects.

Not evaluating, or evaluating poorly, means that the LEP will not be able to provide meaningful evidence in support of any claims it makes about the effectiveness of a programme or project intervention, which effectively renders any such claims unfounded.

Evaluations should be proportionate to spend, grounded in what is feasible, and tailored to the programme, intervention or project being considered and to the questions they are intended to answer.

Considering evaluation early in the development of a programme or intervention will enable the most appropriate type of evaluation to be identified and adopted.

2. What is a good evaluation?

A good evaluation is an objective process which provides an unbiased assessment of a project’s performance.

Good evaluations should always provide information which could enable less effective programmes or projects to be improved, support the reinvestment of resources in other activities, or simply save money. More generally, evaluations generate valuable information that can be used in a variety of ways, making them a powerful tool to help inform future strategies.

Good evaluation, and the reliable evidence it generates, provides direct benefits in terms of the performance and effectiveness of the LEP and its partners, supports democratic accountability, and is key to achieving appropriate returns from Government funding.

All evaluations should be grounded in the availability of high-quality data.

3.

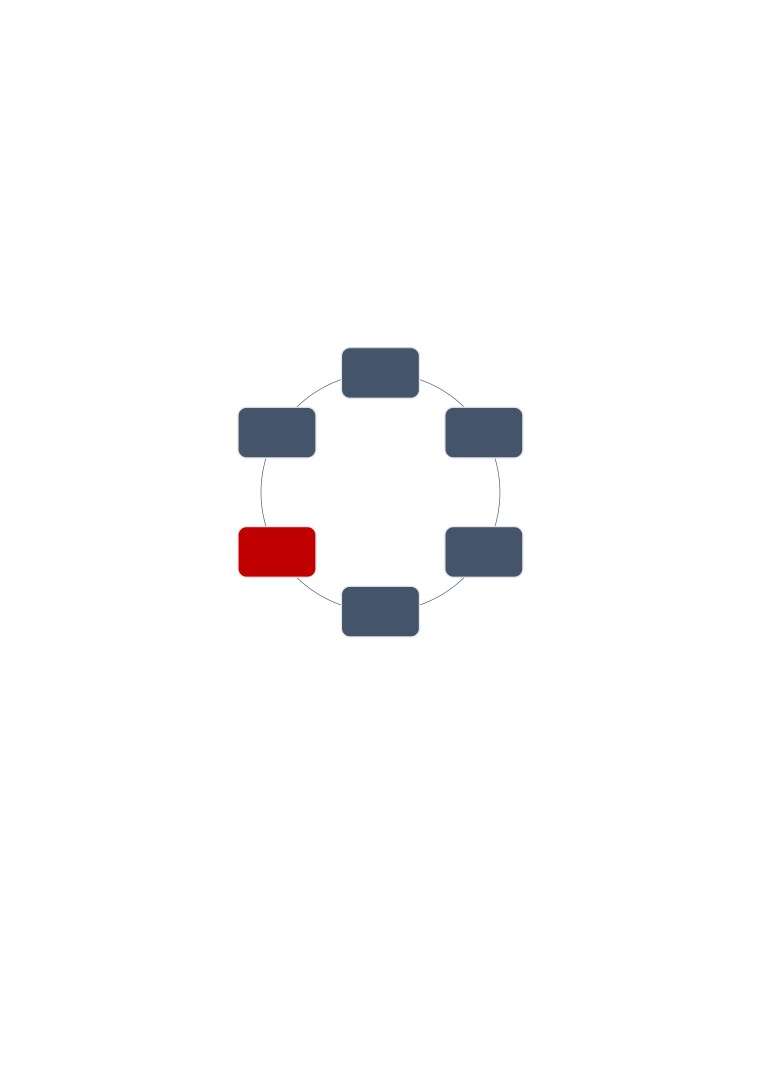

Evaluation Approach

Monitoring and evaluation should be incorporated into the development and planning of a project from the start and agreed through the Heads of Terms. This is important to ensure successful implementation and the responsible, transparent management of funding and resources. Monitoring and evaluation is a process of continuous improvement, illustrated by the ROAMEF cycle set out in HM Treasury’s Green Book: Appraisal and Evaluation in Central Government.

Figure 2: ROAMEF Cycle (Rationale, Objectives, Appraisal, Monitoring, Evaluation, Feedback)

• Rationale is the reasoning behind our choice of a particular project.
• Objectives are what we expect the project to achieve.
• Appraisal takes place after the rationale and objectives of the project have been formulated. The appraisal determines whether the project fulfils our criteria on deliverability, additionality and value for money, and whether it fits with the ambitions and objectives outlined in the Economic Strategy. The appraisal may be carried out by an independent third party to ensure fairness and transparency.
• Monitoring checks progress against planned targets and can be defined as the formal reporting and evidencing that spend and outputs are being delivered and milestones met. Monitoring is undertaken by the LEP’s Programmes team.
• Evaluation assesses the effectiveness of a project or intervention both during and after implementation. It measures outcomes and impacts to determine whether the anticipated benefits have been realised. It also reviews the approach taken, to determine whether it was the right one and whether we could do things better in future. Evaluations can be carried out by the LEP or by an independent third party.
• Feedback is the use and dissemination of evaluation findings, which is critical for influencing decision-making and informing strategic planning.

4. Types of Evaluation

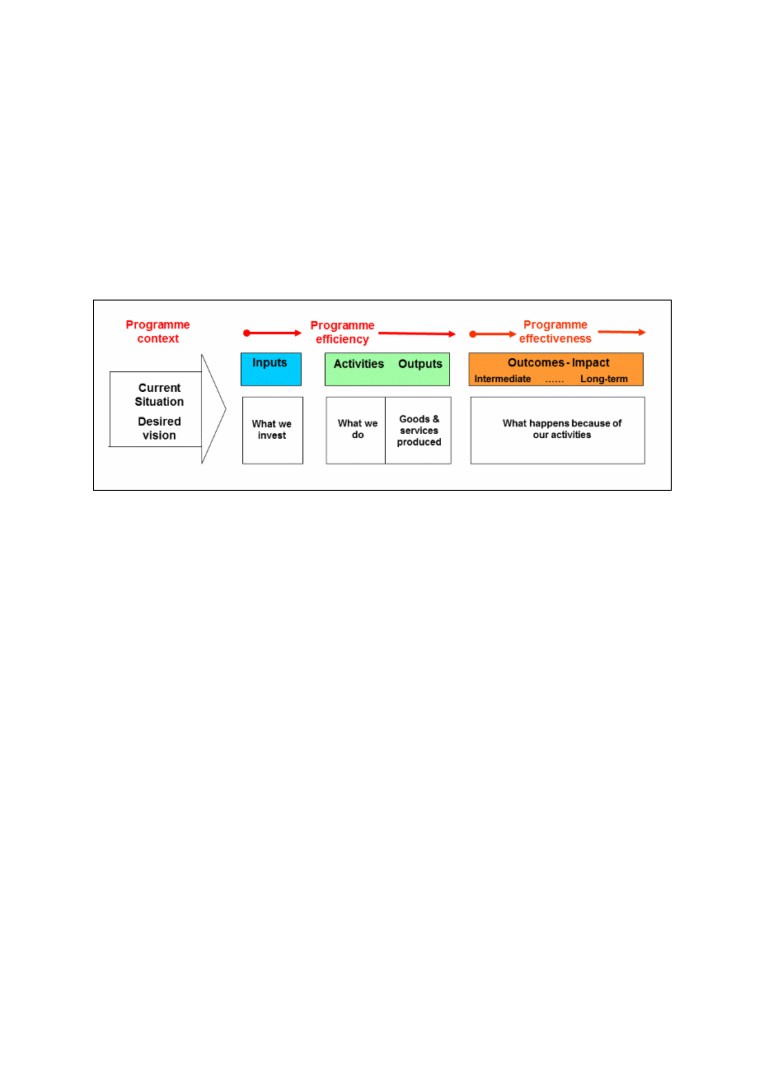

The LEP employs a combination of three types of evaluation (process, impact and economic) to assess the outcomes of a programme / project, i.e. how it was delivered, what difference it made, whether it could be improved and whether the benefits justified the costs (see Figure 3). Appendix 1 sets out the differences between these three types of evaluation.

Figure 3: Different Types of Evaluation

Understanding why a project operated in the way it did, and why it had the effect it had, requires a combination of all three types of evaluation, and these need to be designed and planned at the same time.

Before deciding which evaluation approach is appropriate, a wide range of factors needs to be considered, including:

• the nature and scale of the project, its complexity, its degree of innovation, its implementation and its future direction;
• the objectives of the evaluation and the types of questions it would ideally answer;
• the timing of key policy decisions and the information on which they need to be based;
• the types of impacts which are expected, the timescales over which they might occur, and the availability of information and data relating to them and to other aspects of the project;
• the time and resources available for the evaluation.

5. Identifying the right type of evaluation for the project

The level of evaluation should be chosen to achieve the most comprehensive outcome possible; the aspiration is that programmes should be evaluated counterfactually (i.e. against what would have happened to the outcomes in the absence of the intervention). Where this is genuinely not feasible, or would not represent value for money, less demanding evaluation designs can still be meaningful.

Evaluation types (and/or methods) are determined by the nature of the questions they attempt to answer, so it is important to begin an evaluation by being clear about what is wanted from it. A logic model helps by providing an outline of the project, from which measures of success can be developed to track the project’s development and impact over time.

The logic model (Figure 4) should be used for each evaluation to illustrate how the key elements of the project / intervention contributed to its outcomes.

Figure 4: Logic Model

The various elements of the logic model are explained below:

• Inputs (resources and/or infrastructure): what raw materials will be used to deliver the project/intervention? Inputs can also include constraints on the programme, such as regulations or funding gaps, which are barriers to the project’s objectives.
• Activities: what does the intervention need to do with its resources to direct the course of change?
• Outputs: jobs created, match funding, new houses, etc.
• Outcomes (or impacts): what kinds of changes came about as a direct or indirect result of the activities?
• Impact site: the area which has been positively influenced by change resulting from the project. This can be a specific location or a wider area.

It is therefore important to have a clear idea of the questions that need to be addressed, and of the type(s) of evaluation required, at an early stage in developing the programme or intervention, to help inform the design of the evaluation and the resources required.

The following provides information on the different types of evaluation used within the LEP:

Process Evaluation: How was the project delivered?

Process evaluations use a variety of qualitative and quantitative data to explore how the project was implemented, assessing the actual processes employed and often drawing on assessments of effectiveness from individuals involved in, or affected by, the project’s implementation.

There is an overlap between the types of questions answered by process evaluation and those addressed through impact evaluation. Programme / project delivery can be described in terms of output quantities, such as jobs and new businesses created, but these are also measurable outcomes of the project. This means that process evaluations often need to be designed with the objectives and data needs of impact evaluations in mind, and vice versa. Planning and using the two types of evaluation together will help to ensure that any such interdependencies are accounted for.

Impact Evaluation: What difference did the project make?

Impact evaluation uses quantitative data to determine whether a project was effective in meeting its objectives. This involves focusing on the outcomes of the project.

A good impact evaluation recognises that most outcomes are affected by a range of factors, not just the project itself. To assess whether the project was responsible for the change, we need to estimate what would have happened if the project had not gone ahead (i.e. ‘business as usual’). To support this type of evaluation, the business case should include these estimates and forecast the difference the project could make.
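To illustrate the arithmetic behind this, the Python sketch below treats the impact attributable to a project as the outcome with the project minus the estimated ‘business as usual’ outcome, and compares the impact found at evaluation with the impact forecast in the business case. The class and function names are hypothetical and the figures are made up for illustration; this is a simplification under stated assumptions, since a real impact evaluation also needs a credible method for estimating the counterfactual itself.

```python
from dataclasses import dataclass


@dataclass
class OutcomeEstimate:
    """Hypothetical container for one outcome measure (e.g. jobs)."""
    with_project: float       # outcome observed (or forecast) with the project
    business_as_usual: float  # estimated outcome had the project not gone ahead


def attributable_impact(estimate: OutcomeEstimate) -> float:
    """Change attributable to the project: outcome with the project minus
    the counterfactual ('business as usual') outcome."""
    return estimate.with_project - estimate.business_as_usual


def share_of_forecast_achieved(actual: OutcomeEstimate,
                               forecast: OutcomeEstimate) -> float:
    """Impact found at evaluation as a share of the impact forecast in the
    business case (1.0 means the forecast difference was fully delivered)."""
    forecast_impact = attributable_impact(forecast)
    if forecast_impact == 0:
        raise ValueError("business case forecasts no impact to compare against")
    return attributable_impact(actual) / forecast_impact


# Illustrative figures only (not LEP data): jobs in the impact site.
forecast = OutcomeEstimate(with_project=500, business_as_usual=380)
actual = OutcomeEstimate(with_project=460, business_as_usual=370)
print(attributable_impact(actual))                   # 90
print(share_of_forecast_achieved(actual, forecast))  # 0.75
```

Here the project is credited with 90 jobs rather than the gross 460, because 370 would have arisen anyway; this is why the business case needs to record its ‘business as usual’ estimate at the outset.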

A reliable impact evaluation may demonstrate and quantify the outcomes generated by a project, but on its own it will not show whether those outcomes justified the project. Economic evaluation considers such issues, including whether the benefits of the project have outweighed the costs.

Economic Evaluation: Did the benefits justify the costs?

Economic evaluation involves calculating the economic costs associated with a project and translating its estimated impacts into economic terms, to provide an understanding of the return on investment or value for money. There are different types of economic evaluation, including:

• Cost-effectiveness analysis produces an estimate of “cost per unit of outcome” (e.g. cost per additional individual placed in employment) by taking the costs of implementing and delivering the project and relating them to the total quantity of outcome generated.
• Cost-benefit analysis examines the overall justification for a project by quantifying as many of the costs and benefits as possible, including wider social and environmental impacts (such as air pollution and traffic accidents) where feasible. It goes further than cost-effectiveness analysis by also placing a monetary value on the changes in outcomes (e.g. the value of placing an additional individual in employment). This means that cost-benefit analysis can also compare projects which have quite different outcomes.
• Net Treasury Gain can be described as ‘for every £1 spent, an additional £X is generated for the economy’. It can be used to make the case for Government funding by demonstrating that a project generates more money for the Government than it costs to run (i.e. a positive net treasury gain).
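Once costs and outcomes have been quantified, the three measures above reduce to simple arithmetic. The following Python sketch shows one way to compute them; the function names are hypothetical and the figures are illustrative only. The Net Treasury Gain functions assume that the ‘return’ is money flowing back to Government, and whether £X is read as the gross return per £1 (as here) or the net gain per £1 is also an assumption, since this guidance does not define it more precisely.

```python
def cost_per_unit_outcome(total_cost: float, additional_outcomes: float) -> float:
    """Cost-effectiveness analysis: cost of implementing and delivering the
    project divided by the quantity of additional outcome generated."""
    if additional_outcomes <= 0:
        raise ValueError("no additional outcome to spread the cost across")
    return total_cost / additional_outcomes


def benefit_cost_ratio(monetised_benefits: float, total_cost: float) -> float:
    """Cost-benefit analysis summary: monetised benefits per £1 of cost.
    Because both sides are in money, projects with different kinds of
    outcome can be compared on the same scale."""
    return monetised_benefits / total_cost


def net_treasury_gain(fiscal_return: float, public_cost: float) -> float:
    """Assumed definition: money returned to Government minus the public cost
    of running the project (a positive value is a net treasury gain)."""
    return fiscal_return - public_cost


def return_per_pound(fiscal_return: float, public_cost: float) -> float:
    """Assumed 'for every £1 spent' figure: gross fiscal return per £1 of cost."""
    return fiscal_return / public_cost


# Illustrative figures only (not LEP data).
cost = 1_350_000
print(cost_per_unit_outcome(cost, 90))      # 15000.0
print(benefit_cost_ratio(2_700_000, cost))  # 2.0
print(net_treasury_gain(1_620_000, cost))   # 270000
print(return_per_pound(1_620_000, cost))    # 1.2
```

Note that the cost-effectiveness figure should divide by the additional (attributable) outcome from the impact evaluation, not the gross output count; otherwise the cost per unit will be understated.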

Economic approaches value inputs and outcomes in different ways. It is therefore important that the needs of any economic evaluation are considered from the start, in the development and planning of a project. Otherwise, an evaluation may generate information which, although highly interesting and valid, is not compatible with a cost-benefit framework, making an economic evaluation very difficult to undertake.

6. Key Evaluation Questions

Having a set of Key Evaluation Questions (KEQs) makes it easier to decide what data to collect, how to analyse it and how to report it. A maximum of five to seven main questions should be sufficient. It may also be useful to have some more specific questions under each KEQ.

KEQs are open questions, broad enough to be broken down but specific enough to be useful in guiding you through the evaluation. They are good at reminding us to explore why something occurred, what worked for whom, and so on. KEQs should lead to useful, credible evaluation and are not the same as questions in a survey. Below are some examples of KEQs:

Source: Regeneris Consulting

7. Overview of Evaluation within the LEP

A number of steps need to be considered when designing and managing an evaluation; these are detailed in Appendix 2. In broad terms, monitoring and evaluation consists of:

• Annual strategic review - reflecting on progress against the Economic Strategy and evidencing action taken to deliver against its ambitions. A specific focus of the review will be to evidence the strategic ‘added value’ achieved (e.g. through the LEP working in collaboration with partners), as well as the direct investment secured. The review will also reflect on overall economic performance and progress towards the economic indicators.

• Monitoring of our Investment Portfolio - the LEP continues to monitor the ongoing performance and progress of projects within its portfolio. The aims of this monitoring are to:
o understand the progress and performance of investments, identify slippage and risks to delivery, and justify reinvestment or resource savings;
o collect data that will support subsequent evaluation / impact studies;
o report progress back to Central Government / funders.

The metrics used to monitor each of our programme investments are set out in our LEP Programme Outputs - User Guide and Technical Annexe.

• Evaluating Individual Investments - in addition to basic monitoring requirements, each investment project will be required to produce a post-project evaluation report. An Evaluation Programme has been developed which monitors the LEP’s portfolio of investments. It identifies what will be evaluated, the timing of each evaluation, who is responsible for designing the evaluation and who the evaluation will report back to. It also monitors the data being collected and whether evaluations will be conducted in-house or externally commissioned.

• Meta-Evaluation - the LEP may conduct strategic evaluations across its portfolio to understand the impact on a specific theme or group of projects, learn lessons from pilot/exploratory projects, and build the evidence base on what works and why. The exact nature of these evaluations will be informed by intelligence needs in future years, but they may explore particular investment themes (e.g. FE capital, innovation infrastructure) or specific geographies (e.g. investment in our priority places). To enable meta-evaluation studies, the LEP ensures there is consistency in measuring success at individual project level.

Appendix 3 provides more detail on our evaluation approach.

8. Programme of Evaluation

The Strategy Team maintains a programme of evaluation. This is a simple spreadsheet which is completed by the LEP executive staff responsible for monitoring project outputs and ensuring evaluations are undertaken. The programme of evaluation is reviewed and updated quarterly.

The programme of evaluation includes:

• an overview of what will be evaluated by when;
• whether the evaluation will be conducted internally or externally commissioned;
• whether the evaluation is a contractual requirement;
• the chosen evaluation approach;
• who is leading the evaluation;
• who the evaluation will report back to;
• the data being collected.

The programme of evaluation can be found here: 2019-09-23 LEP Evaluation programme.xlsx
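As an illustration of how the columns listed above could be held as structured data (for example, when preparing for the quarterly review), the following Python sketch models one row of the programme of evaluation and lists the evaluations falling due before a given review date. The class, its field names and the helper function are hypothetical and do not reflect the actual layout of the spreadsheet.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class EvaluationEntry:
    """Hypothetical model of one row of the programme of evaluation.
    Field names are illustrative; the real spreadsheet's columns may differ."""
    what: str                       # what will be evaluated
    due: date                       # by when
    externally_commissioned: bool   # False = conducted internally
    contractual_requirement: bool   # is the evaluation a contractual requirement?
    approach: str                   # e.g. "process", "impact", "economic"
    lead: str                       # who is leading the evaluation
    reports_to: str                 # who the evaluation reports back to
    data_collected: list[str] = field(default_factory=list)


def due_before_next_review(entries: list[EvaluationEntry],
                           review_date: date) -> list[str]:
    """Names of evaluations falling due on or before the given review date."""
    return [e.what for e in entries if e.due <= review_date]


# Illustrative entries only (not taken from the LEP spreadsheet).
entries = [
    EvaluationEntry("Project A", date(2020, 1, 31), externally_commissioned=False,
                    contractual_requirement=True, approach="process",
                    lead="Project sponsor", reports_to="LEP Board",
                    data_collected=["spend", "outputs"]),
    EvaluationEntry("Project B", date(2020, 6, 30), externally_commissioned=True,
                    contractual_requirement=False, approach="impact",
                    lead="Head of Strategy", reports_to="LEP Board"),
]
print(due_before_next_review(entries, date(2020, 3, 31)))  # ['Project A']
```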


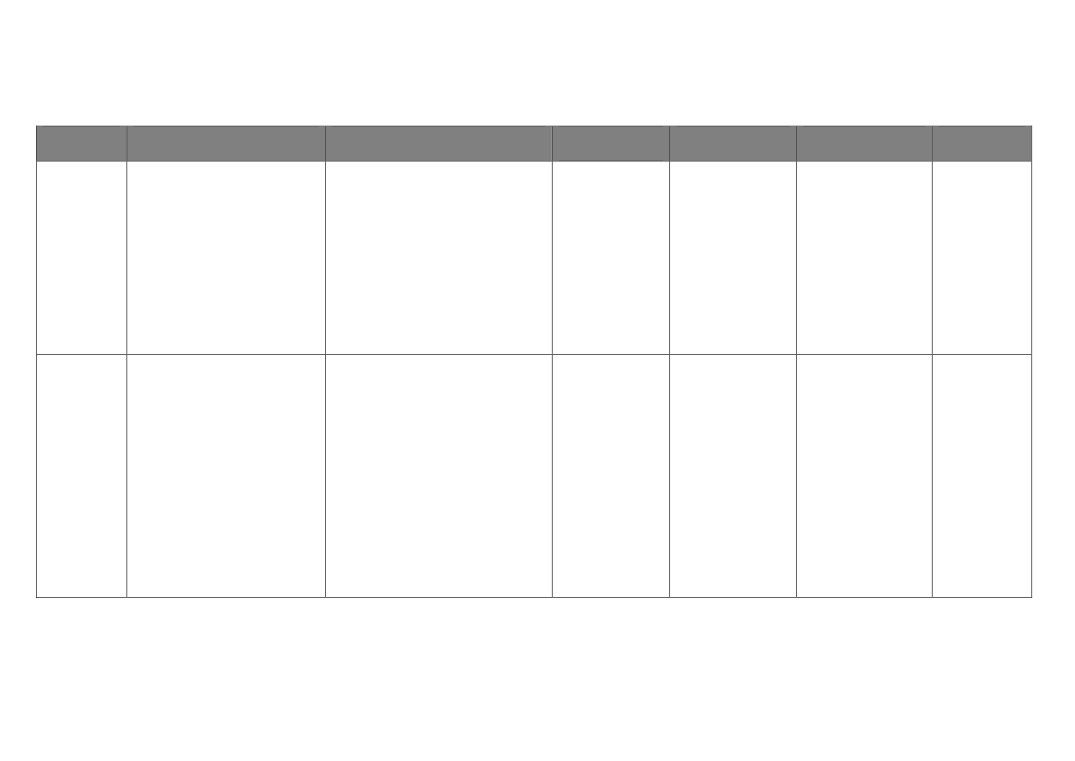

APPENDIX 1

Types of Evaluation

The following types of evaluation can be used singly or in combination to assess the outcomes of projects and programmes.

| Type | Monitoring | Process Evaluation | Impact Evaluation | Economic Evaluation |
|---|---|---|---|---|
| Purpose | To capture qualitative and quantitative information relating to inputs, activities and outputs at the individual project or programme level. | To assess whether a programme or project is being implemented as intended and what, in practice, is felt to be working more or less well, and why. | An objective test to assess what changes have occurred, and the extent to which these can be attributed to the programme / project intervention. | To compare the benefits of the programme / project with its costs. |
| What it will tell us | Whether it is on track to deliver on time / to spend / expected outcomes. | How the programme / project was delivered. | What difference the programme / project made. | Whether the benefits justified the costs. |
| When | Monitoring conducted monthly, quarterly or annually. In some cases, ex post outcome evaluation will be conducted. | Variable: during or soon after implementation. | Prioritised for larger or innovative projects and programmes, or projects where opportunities for learning are greatest. | Annual, as part of the review of the Economic Strategy. |
| Coverage | Monitoring coverage will be universal. | Focused on key aspects of the delivery and decision-making infrastructure and selected projects and programmes. | Prioritised for larger or innovative projects and programmes, or projects where opportunities for learning are greatest. | LEP-wide. |

APPENDIX 2

Planning an Evaluation

Defining the objectives and intended outcomes
• What is it we want to achieve through the programme / project / intervention?

Defining the audience for the evaluation
• Who will be the main users of the findings and how will they be engaged?

Identifying the evaluation objectives and research questions
• What do we need to know about what difference the programme / project / intervention made, and/or how it was delivered?
• How broad is the scope of the evaluation?
• Is an impact, process or combined evaluation required?
• Is an economic evaluation required (i.e. to establish whether the benefits justified the cost)?

Selecting the evaluation approach
• How extensive is the evaluation likely to be?
• What level of robustness is required (i.e. strength of the evidence/fact or judgement/confidence level)?

Identifying the data requirements
• What data is required?
• What is currently being measured, already being collected or otherwise available?
• What additional data needs to be collected?
• Who will be responsible for data collection and what processes need to be set up?
• If the evaluation is assessing impact, at what point in time should the impact be measured?

Identifying the resources and governance arrangements
• How large scale / high profile is the project / programme / intervention, and what is a proportionate level of resource for the evaluation?
• What is the best governance structure to have in place for the evaluation?
• Who is the project owner and who is providing the analytical support?
• Will the evaluation be externally commissioned or conducted in-house?
• Is there any budget for this evaluation and is this compatible with the evaluation requirements? Has sufficient allowance been built in?
• Who is responsible for developing the specification, tendering, project management and quality assurance?

Conducting the evaluation
• When does the primary data collection need to take place?
• Is piloting or testing of research methods needed?
• When will the evaluation start and end?

Using and disseminating the evaluation findings
• What will the findings be used for, and what decisions will they feed into?
• How will the findings be shared and disseminated?

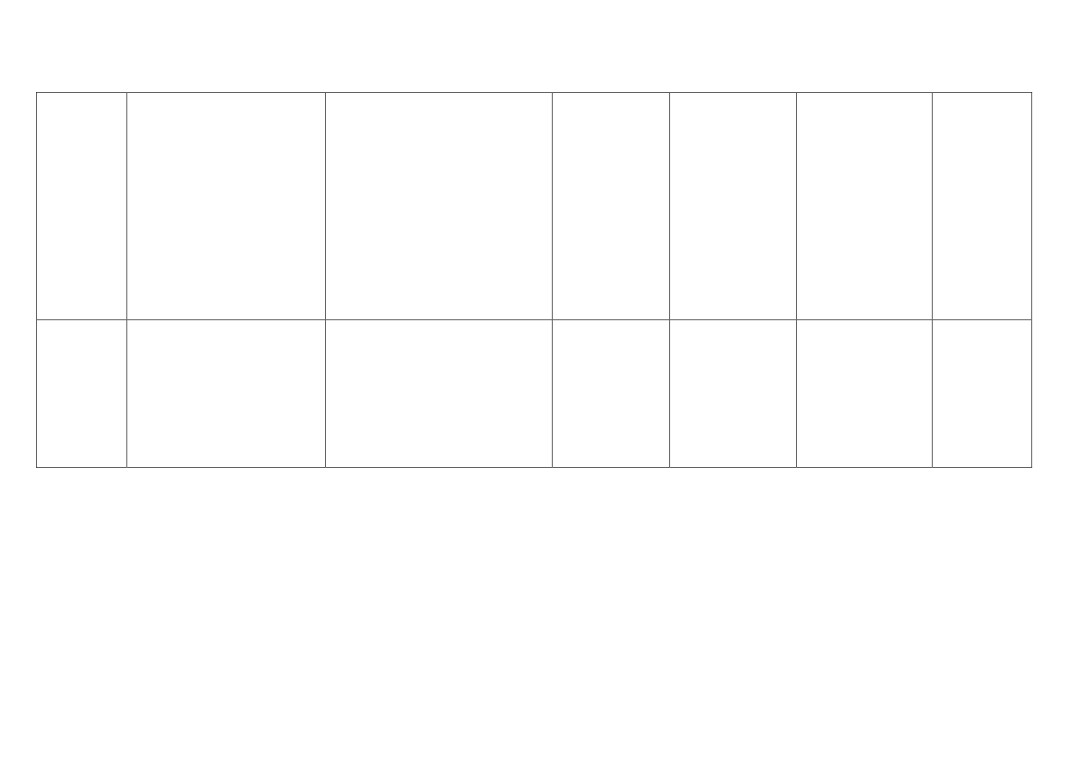

APPENDIX 3

Annual Strategic Review
• Why: to demonstrate progress against the Economic Strategy ambitions, covering strategic added value, investment achieved, and economic performance and progress against the economic indicators.
• How: review conducted by the Strategy team, with views gathered from the LEP Executive and local authority partners, key external stakeholders, the LEP Board, the Economic Strategy Delivery Board and the LEP Leadership team.
• Owner: Chief Operating Officer.
• Dissemination: AGM, website and newsletter.
• When: complete by the AGM (September 2019).

Monitoring our Investment Portfolio
• Why: to understand the progress and performance of investments, identify slippage and risks to delivery, and justify reinvestment or resource savings; to collect data that will support subsequent evaluation / impact studies; and to report progress back to Central Government / funders.
• How: detailed in our Evaluation Programme.
• Owner: Head of Programmes.
• Dissemination: data sent to Government, the LEP Board and the Leadership Team.
• When: quarterly, six-monthly or annually, depending on the agreed reporting framework.

Evaluating Individual Investments
• Why: individual projects will be required to produce a post-programme evaluation report covering, as a minimum: expenditure; outputs; impact; lessons learnt (process and impact); and an assessment of success (meeting the original objectives and tackling the problem).
• How: Appendix x provides a template for individual projects to use in developing their M&E plans.
• Cost, Funding and Resources: project budget.
• Owner: project sponsor.
• Dissemination: LEP leadership and management groups, and online.
• When: end of project.

Meta-Evaluation
• Why: strategic evaluation studies to understand the impact of a specific project or group of projects, learn lessons from pilot/exploratory projects, and build the evidence base on what works and why.
• How: externally commissioned evaluation studies.
• Cost, Funding and Resources: resources (tbc).
• Owner: Head of Strategy.
• Dissemination: on the website, and promoted directly to project sponsors, the LEP Board and leadership groups.
• When: as and when required.